You might have seen my previous post on building out a Jenkins server on AWS. Towards the end, I mentioned some of the things you can do during the build and post-build steps for your particular project. While there are lots of different directions to go, one of the most common use cases is pushing your changes to a Force.com instance for merging and running unit tests.

There’s a catch though: you don’t always want to push everything in a development org to a testing environment. Think about the following scenario:

Kevin is changing File A

Dave is changing File B

Evan is changing File C

Nick is changing File D

Now I do a commit and push on file A. Jenkins clones my repo, runs my ant task, and pushes my file A to the testing instance, along with everything else in my package.xml.

If Nick now does a commit and push, he’ll be committing not only his changed file D but also his unchanged file A (unless he first fetches from the merged branch or from mine, which is unlikely). Jenkins will then use his package.xml and send his unchanged file A up to the testing instance, overwriting my hard work and ensuring that his file D changes are never tested against my file A changes. This is, obviously, a problem.

There are a couple of possible solutions though:

1. Nick could maintain his own package.xml that only includes the files he’s working on. This relies on each developer to hand-maintain their own package.xml, and it makes everybody responsible for the integrity of the build process, a precarious scenario. Not to mention that when Nick goes to merge his branch, his package.xml would be out of date with the rest of the package, so he’d need to remember to revise it prior to committing. Ugly, ugly, ugly.

2. Nick could keep package.xml out of source control completely. This has the advantage of avoiding the merge scenario described above, but Nick would still need to maintain that file by hand. I’m also a proponent of keeping package.xml in source control. After all, it’s literally the manifest of what’s important to your particular project.

3. Jenkins could be smart enough to know which files changed in a set of commits, custom-build a package.xml based on those files, do its deploy, then put the original package.xml back in place.

Needless to say, we’re going with option 3, and I wanted to share our approach.

The first thing we looked at was the best way to get the file diffs from Github. There were two different techniques I tested out. First, you can intercept the JSON payload that Github sends to the Jenkins web-hook and pass it to your build as a parameter. This was actually the first direction I went; there’s a solid article on it here. You then take that JSON payload and parse it with a command-line utility. Something like sed could work if you’re looking to torture yourself, or you could use a utility like jsawk, which lets you parse JSON using JS from the shell. My challenge was getting the spidermonkey engine, on which jsawk depends, up and running alongside Ubuntu’s rhino engine. Eventually, I realized I was mucking around too much in config files, and elected to try a different route.

The next direction I went was using git to look at the diff between my commits. The only challenge here was the need to maintain the most recent commit ID from Github, so I knew which two commits to search between. The command I came up with to view the diff file names was:

git diff-tree --no-commit-id --name-only -r $LCOMMIT $PREVRSA

Here LCOMMIT is the ID of the most recent commit, while PREVRSA contains the hash of the last commit that went through the build (despite the variable name, Git commit IDs are SHA-1 hashes, not RSA). Once that diff information was in hand, we could build a script to extract it, parse it, and then build a new package.xml based on the changed files. The gist below with the script uses the xmlstarlet parser, which you can get on an Ubuntu instance with:

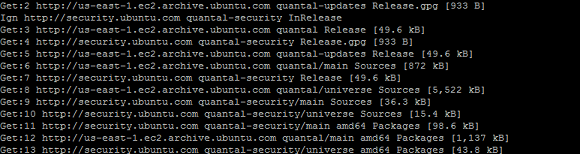

sudo apt-get install xmlstarlet
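To make the package.xml-building step a little more concrete, here is a minimal sketch. It is not the script from the gist: it uses plain printf instead of xmlstarlet so it has no dependencies, the function name build_package_xml is made up for illustration, and the API version default is an assumption you would replace with your org's version. It expects lines of the form "MetadataType MemberName" on stdin.

```bash
#!/usr/bin/env bash
# Sketch only: emit a package.xml for "<MetadataType> <MemberName>" pairs on stdin.
# Assumptions: printf is used instead of xmlstarlet, member names need no XML
# escaping, and API_VERSION (default 29.0) is a placeholder for your org's version.

API_VERSION="${API_VERSION:-29.0}"

build_package_xml() {
  printf '<?xml version="1.0" encoding="UTF-8"?>\n'
  printf '<Package xmlns="http://soap.sforce.com/2006/04/metadata">\n'
  # Sort in the C locale so members of one type are adjacent; -u drops duplicates
  # (for example Foo.cls and Foo.cls-meta.xml both mapping to the same member).
  LC_ALL=C sort -u | {
    current=""
    while read -r mtype member; do
      if [ -z "$mtype" ] || [ -z "$member" ]; then
        continue
      fi
      if [ "$mtype" != "$current" ]; then
        # Close the previous <types> block before opening the next one.
        if [ -n "$current" ]; then
          printf '        <name>%s</name>\n    </types>\n' "$current"
        fi
        printf '    <types>\n'
        current="$mtype"
      fi
      printf '        <members>%s</members>\n' "$member"
    done
    if [ -n "$current" ]; then
      printf '        <name>%s</name>\n    </types>\n' "$current"
    fi
  }
  printf '    <version>%s</version>\n</Package>\n' "$API_VERSION"
}
```

Something like `printf 'ApexClass Foo\nApexPage Home\n' | build_package_xml > src/package.xml` would then write a manifest containing only those two members (the src/package.xml path is also an assumption about your project layout).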

You’ll also need to make sure your Jenkins user has the right permissions on the Jenkins workspace (/var/lib/jenkins/workspace), as it will need to read, write and execute files there. I also created two separate directories in the workspace. One, called ‘scripts’, holds any scripts I use. (You’ll also need a post-build script to copy the original package.xml.bak file back over your custom package.xml.) The other, called lastcommit, contains text files with the hash of the last commit for each project, in the name format of
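The bookkeeping around the build (keeping a .bak copy of the manifest, restoring it afterwards, and remembering the last built commit) could look roughly like the sketch below. The function names are hypothetical, and every path is passed in by the caller, so nothing here assumes a particular file naming scheme inside lastcommit.

```bash
#!/usr/bin/env bash
# Sketch only: helpers for swapping package.xml and remembering the last built
# commit. Function names are hypothetical; all paths come from the caller.

# Keep a copy of the committed manifest before the custom one overwrites it.
backup_manifest() {
  local manifest="$1"
  cp -p "$manifest" "$manifest.bak"
}

# Post-build step: copy the original manifest back over the custom one.
restore_manifest() {
  local manifest="$1"
  if [ -f "$manifest.bak" ]; then
    cp -p "$manifest.bak" "$manifest"
  fi
}

# Print the commit recorded in the state file, or nothing if it does not exist
# yet (for example on the very first build of a job).
read_last_commit() {
  local state_file="$1"
  if [ -f "$state_file" ]; then
    head -n 1 "$state_file"
  fi
}

# Record the commit that was just built so the next run can diff against it.
save_last_commit() {
  local state_file="$1" commit="$2"
  mkdir -p "$(dirname "$state_file")"
  printf '%s\n' "$commit" > "$state_file"
}
```

Saving the commit only after a successful deploy is probably the safer order, since a failed build would otherwise cause the next run to skip the files that never made it to the testing instance.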

Finally, to call this script from Jenkins (assuming the script is stored as: /var/lib/jenkins/workspace/scripts/head_pkg_builder.sh), you need to use some of the baked-in Jenkins environment variables. To make the call in project config, add a new ‘Execute Shell’ build step, with the following call:

bash /var/lib/jenkins/workspace/scripts/head_pkg_builder.sh -b "$JOB_NAME" -w "$WORKSPACE" -l "$GIT_COMMIT"
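For readers writing their own version of the script, here is one way the -b, -w and -l options from the call above could be read, using the shell's built-in getopts. This is a sketch rather than the contents of head_pkg_builder.sh: the function name parse_args and the variable names BUILD_NAME and WORKSPACE_DIR are invented for illustration.

```bash
#!/usr/bin/env bash
# Sketch only: parse the -b (job name), -w (workspace) and -l (latest commit)
# options. parse_args, BUILD_NAME and WORKSPACE_DIR are hypothetical names.

parse_args() {
  local opt OPTIND=1
  BUILD_NAME=""
  WORKSPACE_DIR=""
  LCOMMIT=""
  while getopts "b:w:l:" opt; do
    case "$opt" in
      b) BUILD_NAME="$OPTARG" ;;
      w) WORKSPACE_DIR="$OPTARG" ;;
      l) LCOMMIT="$OPTARG" ;;
      *) echo "usage: -b <job name> -w <workspace> -l <commit>" >&2
         return 2 ;;
    esac
  done
  # All three values are needed to find the repo and compute the diff.
  if [ -z "$BUILD_NAME" ] || [ -z "$WORKSPACE_DIR" ] || [ -z "$LCOMMIT" ]; then
    echo "usage: -b <job name> -w <workspace> -l <commit>" >&2
    return 2
  fi
}
```

A script would call `parse_args "$@"` near the top and stop if it returns non-zero. Quoting the Jenkins variables in the build step matters here, because a job name or workspace path containing a space would otherwise be split into several arguments.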

Currently the script can build for changes in the following metadata objects, and it would be trivial to add new ones:

Classes

VF Pages

Components

Triggers

Custom Apps

Custom Labels

Objects

Custom Tabs

Static Resources

Workflow

Remote Site Settings

Page Layouts
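Supporting a new metadata object mostly comes down to one more folder-to-type mapping. The sketch below shows the idea for the types listed above, assuming the conventional Force.com project layout of src/<folder>/<file>. The function names are hypothetical, and the real script may well organize this differently.

```bash
#!/usr/bin/env bash
# Sketch only: map a changed file path to "<MetadataType> <MemberName>".
# Assumes the conventional src/<folder>/<file> layout; function names are
# hypothetical and not taken from the original script.

metadata_type_for_dir() {
  case "$1" in
    classes)            echo "ApexClass" ;;
    pages)              echo "ApexPage" ;;
    components)         echo "ApexComponent" ;;
    triggers)           echo "ApexTrigger" ;;
    applications)       echo "CustomApplication" ;;
    labels)             echo "CustomLabels" ;;
    objects)            echo "CustomObject" ;;
    tabs)               echo "CustomTab" ;;
    staticresources)    echo "StaticResource" ;;
    workflows)          echo "Workflow" ;;
    remoteSiteSettings) echo "RemoteSiteSetting" ;;
    layouts)            echo "Layout" ;;
    *)                  return 1 ;;   # unknown folder: not deployable metadata
  esac
}

path_to_member() {
  local path="$1" dir file mtype
  dir="$(basename "$(dirname "$path")")"
  file="$(basename "$path")"
  file="${file%-meta.xml}"            # Foo.cls-meta.xml -> Foo.cls
  mtype="$(metadata_type_for_dir "$dir")" || return 1
  printf '%s %s\n' "$mtype" "${file%.*}"   # drop the .cls, .page, ... suffix
}
```

Feeding each line of the git diff-tree output through path_to_member, and skipping the paths it rejects, gives the type and member pairs a manifest needs.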

Feel free to post any questions. Good luck, and happy building.